A View Conference 2025 Case Study from BOT VFX

AI at the Director’s Table

What if creativity had a new co-pilot? Imagine directing not just a cast and crew, but an orchestra of algorithms—responsive, adaptable, and capable of amplifying your vision frame by frame. That’s no longer a sci-fi fantasy: it’s the new frontier explored at View Conference 2025, where Srikanth S, Senior Technical Supervisor at BOT VFX, unveiled what happens when GenAI steps onto a real-world production pipeline.

The Project: Trace of One—Fashion Meets AI

At the heart of Srikanth’s talk was Trace of One, an experimental short film for fashion designer Mai Gidah. Here, every pixel sprang from generative AI, but every choice—from character pose to camera movement—remained in the hands of human creatives. The team set a high bar: could these new tools deliver outcomes strong enough to satisfy supervisors, clients, and brand storytellers in serious production?

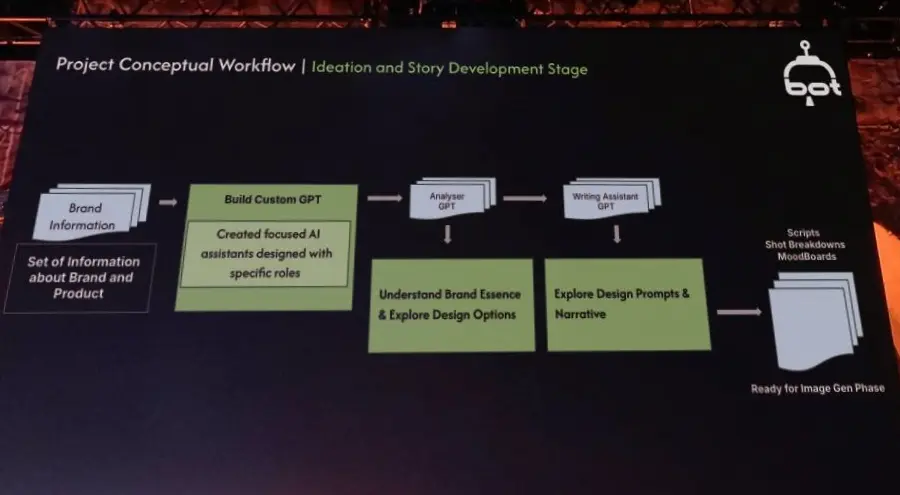

Building the Workflow: Ideas to Final Frames

Production started with big ideas, swiftly supported by GPT-powered assistants. These language models generated drafts and mapped out story structure, working like junior creative collaborators while direction and editing stayed firmly with the human team.

For visuals, things got hands-on. The team conducted a real photoshoot to build a dataset of fashion models and outfits, trained LoRA (low-rank adaptation) models to keep talent and wardrobe consistent from shot to shot, and validated these assets just as you would key VFX elements: testing lighting, angles, and composition before rolling into full shots and scenes.
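One way to picture that validation pass is as a small test matrix. The sketch below is illustrative only and is not the team's actual tooling: the axis values, the `build_validation_prompts` helper, and the `<model_lora>` / `<outfit_lora>` placeholder tokens are all assumptions made for the example. It simply enumerates every lighting, angle, and composition combination so each trained asset can be reviewed under the same conditions.

```python
from itertools import product

# Hypothetical test axes. The talk names lighting, angles, and composition
# as the things tested, but not the specific values used.
LIGHTING = ["studio softbox", "golden hour", "overcast daylight"]
ANGLES = ["front", "three-quarter", "profile"]
COMPOSITION = ["full body", "medium shot", "close-up"]


def build_validation_prompts(subject_token: str) -> list[str]:
    """Return one test prompt per lighting/angle/composition combination."""
    return [
        f"{subject_token}, {comp}, {angle} view, {light}"
        for light, angle, comp in product(LIGHTING, ANGLES, COMPOSITION)
    ]


# Placeholder tokens standing in for whatever trigger words the LoRAs use.
prompts = build_validation_prompts("<model_lora> wearing <outfit_lora>")
print(len(prompts))   # 3 x 3 x 3 combinations
print(prompts[0])
```

Reviewing the resulting stills side by side makes it easier to spot where a face or garment drifts before committing to full shots.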

Motion and pacing followed the traditions of film. The artists generated image-to-video takes for each shot, then stitched and refined them using editorial processes that made the final 4K master feel crafted, not random.

Insights from the Session: Structure, Control, Adaptability

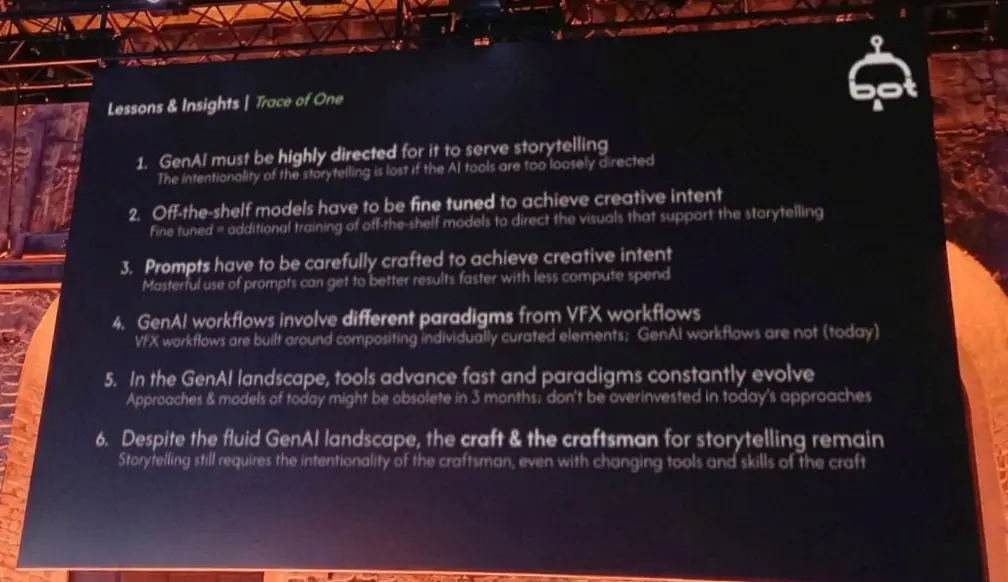

Attendees at View Conference saw firsthand that GenAI isn’t a magical shortcut. LLMs and generative image models are most powerful when directed with clear intent, acting more like creative partners than generic prompt machines. The excitement wasn’t in flashy outputs, but in blending real product, character design, and branded storytelling with the discipline of a production crew.

Throughout the talk, questions circled around control and adaptability—how does a director keep narrative and style consistent when new AI tools and features arrive mid-project? Srikanth’s answer was practical: flexibility, big-picture thinking, and ongoing iteration are the real sources of competitive edge.

Lessons for Every Audience

The Trace of One journey drove home several essential points. GenAI needs a director, not just a button-pusher. Off-the-shelf models won’t recognize your brand story unless you invest in training and refinement. Prompts work best as detailed stage directions, guiding action, mood, and style. AI workflows demand iterative thinking, not just modular tweaks, and adaptability must become a core strength as technology evolves.
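To make the "stage directions" idea concrete, here is a minimal sketch of a prompt treated as a structured shot description rather than a loose string. The `ShotDirection` class, its field names, and the example values are hypothetical and were not shown in the talk; they only illustrate keeping action, mood, and style as separate, editable decisions.

```python
from dataclasses import dataclass


@dataclass
class ShotDirection:
    """Hypothetical container for one shot's direction (illustrative only)."""
    action: str
    mood: str
    style: str
    camera: str = "static"

    def to_prompt(self) -> str:
        # Join the fields in a fixed order so every shot reads the same way.
        parts = [
            self.action,
            f"camera: {self.camera}",
            f"mood: {self.mood}",
            f"style: {self.style}",
        ]
        return "; ".join(parts)


shot = ShotDirection(
    action="model turns toward the window",
    mood="quiet, contemplative",
    style="editorial fashion film",
    camera="slow push-in",
)
print(shot.to_prompt())
```

Keeping the fields separate means a director can change the mood or camera move for one take without rewriting the rest of the direction.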

Most of all, the team found that while tools might change, intent and judgment remain central. AI can expand horizons, accelerate work, and introduce new types of creativity, but only when the people behind the screen steer the process with vision and care.

For anyone in VFX, film, fashion, or brand work, the future lies in partnerships—between humans and machines, between vision and execution. At BOT VFX, Trace of One stands as proof that with the right direction, GenAI can help create compelling stories, remarkable visuals, and original work that reflects not just possibility, but purpose.